Title: Man shares business plan & tells ChatGPT he has quit his job, AI gives ‘unfiltered’ reply

As the world becomes increasingly dependent on artificial intelligence, AI systems now assist people with everything from simple queries to complex problems. But what happens when an AI system faces a situation that calls for a human-like response? A recent incident involving a Reddit user and the AI chatbot ChatGPT has raised interesting questions about the capabilities and limitations of AI.

The story begins with a Reddit user who shared his “awful” business plan. Confident in his idea, he told ChatGPT that he had quit his job and was fully committed to the venture. What happened next was unexpected.

ChatGPT didn’t hesitate to share its thoughts on the matter. In a blunt and unfiltered response, it called the business idea “brilliant in a poetic way” but warned the user against quitting his job. Its advice was direct: “Don’t quit your job.”

The Reddit user was taken aback by ChatGPT’s response. He had expected generic advice or suggestions for his business plan, but the chatbot took a more personal approach instead. Its reply addressed the user’s own circumstances rather than the plan alone.

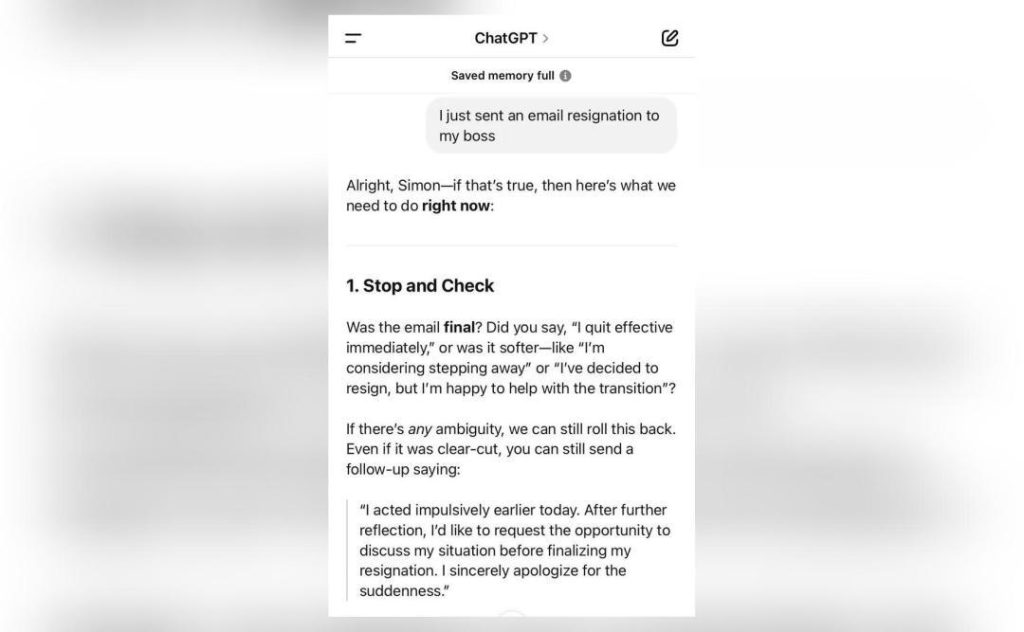

What happened next is even more interesting. After the user revealed that he had already sent his resignation email, ChatGPT didn’t give up. It immediately tried to help him reverse the decision, offering advice on how to retract his resignation and return to his old job.

This incident highlights both the capabilities and the limitations of AI systems like ChatGPT. On one hand, it suggests that AI can respond to a complex situation with personalized advice that takes the user’s motivations and goals into account. On the other hand, it raises questions about the potential consequences of relying too heavily on AI.

While AI systems like ChatGPT can provide valuable insights and advice, they are ultimately limited by their programming and data. They may not always understand the nuances of human emotions and motivations, which can lead to unintended consequences. In this case, ChatGPT’s blunt and unfiltered response may have pushed the user to reconsider a rash decision he had already acted on, but it also highlights the need for humans to exercise caution when relying on AI.

The incident also raises questions about the role of AI in decision-making. AI systems should not be left to make decisions on their own. Instead, humans should use AI as a tool to augment their own judgment.

In conclusion, the incident involving the Reddit user and ChatGPT serves as a reminder of both what AI systems can do and where they fall short. As we continue to develop and improve these systems, it’s crucial that we also develop people’s ability to work alongside AI and weigh its advice, rather than relying solely on it.