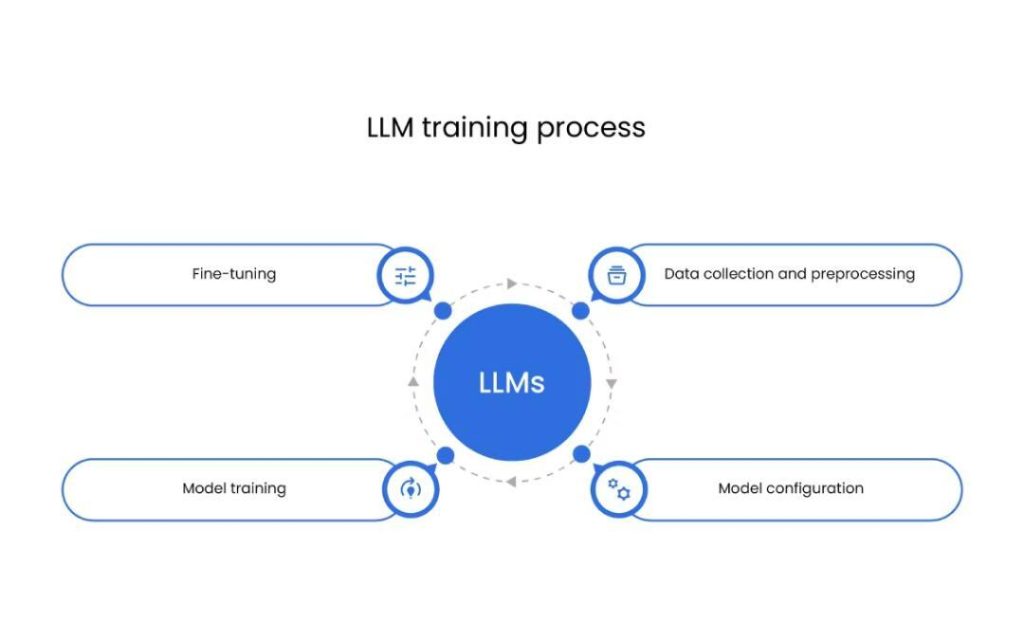

How do you train an LLM from scratch?

Large Language Models (LLMs) have revolutionized the field of natural language processing, enabling applications such as language translation, text summarization, and chatbots. Behind these impressive tools lies a complex process of training an LLM from scratch. In this blog post, we’ll delve into the intricacies of training an LLM, exploring the massive datasets, powerful GPUs, and machine learning techniques required to bring these language giants to life.

What is an LLM?

Before diving into the training process, it’s essential to understand what an LLM is. An LLM is a type of artificial neural network that is trained on vast amounts of text data to generate human-like language understanding and generation capabilities. LLMs are typically trained on a combination of text from books, code, and the web, which allows them to learn patterns, relationships, and contexts within language.

Step 1: Data Collection

The first step in training an LLM is collecting a massive dataset of text. This dataset serves as the foundation for the model’s learning process, providing the examples it needs to recognize patterns and relationships within language. The dataset should be diverse, covering a wide range of topics, styles, and genres.

There are several ways to collect this data, including:

- Web scraping: Gathering text from websites, forums, and social media platforms.

- Book datasets: Using datasets of e-books, articles, and academic papers.

- Code datasets: Collecting code snippets and documentation from various programming languages.

- User-generated content: Gathering text from social media platforms, online forums, and user-generated content.

Step 2: Preprocessing

Once the dataset is collected, it needs to be preprocessed to prepare it for training. This involves several steps:

- Tokenization: Breaking down text into individual words or tokens.

- Stopword removal: Removing common words like “the,” “and,” and “a” that don’t add much value to the meaning of the text.

- Stemming or Lemmatization: Reducing words to their base form (e.g., “running” becomes “run”).

- Normalization: Converting all text to lowercase and removing punctuation.

Step 3: Model Architecture

The next step is designing the architecture of the LLM. This involves choosing the type of neural network, the number of layers, and the size of the hidden states. The architecture should be carefully designed to accommodate the complexity of the task and the size of the dataset.

Common architectures used for LLMs include:

- Recurrent Neural Networks (RNNs): Suitable for sequential data like text.

- Transformers: Designed specifically for natural language processing tasks.

- Bidirectional Encoder Representations from Transformers (BERT): A popular architecture that uses a multi-layer bidirectional transformer encoder.

Step 4: Training

With the dataset preprocessed and the model architecture designed, it’s time to start training the LLM. This involves feeding the dataset into the model, adjusting its weights through machine learning, and optimizing the loss function.

The training process typically involves the following steps:

- Data batching: Splitting the dataset into smaller batches to improve training efficiency.

- Optimizer selection: Choosing an optimizer that adapts to the learning rate and adjusts the model’s weights.

- Loss function selection: Defining the loss function that measures the difference between the model’s predictions and the true labels.

- Gradient descent: Updating the model’s weights based on the gradients of the loss function.

Step 5: Hyperparameter Tuning

Hyperparameter tuning is a critical step in the training process. Hyperparameters include settings like the learning rate, batch size, and number of epochs. These settings can significantly impact the model’s performance, and it’s essential to find the optimal combination.

Common hyperparameter tuning techniques include:

- Grid search: Exhaustively searching through a grid of possible hyperparameters.

- Random search: Randomly sampling hyperparameters and evaluating their performance.

- Bayesian optimization: Using a probabilistic approach to search for the optimal hyperparameters.

Step 6: Evaluation and Deployment

Once the LLM is trained and hyperparameter tuned, it’s essential to evaluate its performance on a test dataset. This involves measuring metrics like accuracy, precision, and recall to ensure the model is functioning as expected.

Finally, the trained LLM can be deployed in a production environment, where it can be used for tasks like language translation, text summarization, and chatbots.

Challenges and Considerations

Training an LLM from scratch is a complex and resource-intensive process. Some of the challenges and considerations include:

- Computational resources: Training an LLM requires powerful GPUs and significant computational resources.

- Data quality: The quality of the dataset can significantly impact the model’s performance, and it’s essential to ensure the dataset is diverse, accurate, and representative.

- Safety alignment: LLMs can generate harmful or offensive content, and it’s essential to implement safety alignment methods to ensure the model produces respectful and responsible output.

- Interpretability: LLMs can be difficult to interpret, and it’s essential to implement techniques like attention mechanisms and saliency maps to understand the model’s decision-making process.

Conclusion

Training an LLM from scratch is a complex process that requires significant computational resources, a massive dataset, and careful consideration of the model architecture, hyperparameters, and safety alignment. While it’s a challenging task, the rewards are significant, as LLMs have the potential to revolutionize the field of natural language processing and enable applications like language translation, text summarization, and chatbots.

Source: