Training LLMs: From Data Prep to Fine-Tuning

Large Language Models (LLMs) have revolutionized the field of natural language processing, enabling applications such as language translation, text summarization, and chatbots. However, training these models requires a deep understanding of the underlying processes and techniques. In this blog post, we will explore the steps involved in training LLMs, from data preparation to fine-tuning, and highlight best practices to ensure the accuracy and usability of these models.

Data Preparation: The Foundation of LLM Training

The first step in training an LLM is to prepare a large, high-quality dataset. This dataset serves as the foundation of the model’s learning process and is used to train the model’s weights. The quality of the dataset has a direct impact on the accuracy and reliability of the model.

To ensure the dataset is of high quality, it is essential to:

- Curate relevant data: The dataset should be relevant to the specific use case or domain of the LLM. This ensures that the model learns to recognize patterns and relationships specific to that domain.

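- Clean and preprocess data: The data should be cleaned to remove errors, inconsistencies, duplicates, and irrelevant content such as leftover markup or boilerplate. The cleaned text is then tokenized, typically with a subword tokenizer such as byte-pair encoding (BPE). Classic NLP steps like stemming and lemmatization are generally not applied, because the model should learn from text in its natural form.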

- Reduce bias: The dataset should be curated to reduce bias, which can lead to inaccurate or unfair results. Bias cannot be eliminated entirely, but it can be mitigated by filtering sensitive or offensive content and ensuring that the dataset represents a diverse range of perspectives and viewpoints.
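The cleaning and filtering steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: `clean_text`, `prepare_corpus`, the `min_words` threshold, and the keyword `blocklist` are all hypothetical choices made for this example. Real pipelines typically add near-duplicate detection and learned quality or toxicity classifiers on top of exact-match deduplication like this.

```python
import hashlib
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")  # crude HTML tag matcher
WS_RE = re.compile(r"\s+")


def clean_text(raw: str) -> str:
    """Strip markup, decode entities, normalize Unicode, and collapse whitespace."""
    text = TAG_RE.sub(" ", raw)                # drop leftover HTML tags
    text = html.unescape(text)                 # &amp; -> &, &lt; -> <
    text = unicodedata.normalize("NFC", text)  # one canonical form per character
    return WS_RE.sub(" ", text).strip()


def prepare_corpus(docs, min_words=5, blocklist=()):
    """Return cleaned documents, skipping short, blocklisted, and duplicate ones."""
    seen_hashes = set()
    kept = []
    for raw in docs:
        text = clean_text(raw)
        if len(text.split()) < min_words:
            continue  # too short to carry useful signal
        lowered = text.lower()
        if any(term in lowered for term in blocklist):
            continue  # crude stand-in for a real content filter
        digest = hashlib.sha256(lowered.encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # exact (case-insensitive) duplicate after cleaning
        seen_hashes.add(digest)
        kept.append(text)
    return kept


docs = [
    "<p>The quick brown fox jumps.</p>",
    "The  quick brown fox   jumps.",
    "too short",
    "This document mentions badword and more.",
]
print(prepare_corpus(docs, blocklist=("badword",)))
# → ['The quick brown fox jumps.']
```

Hashing the normalized text keeps memory use small even for large corpora, since only one digest per kept document is stored.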

Model Selection and Architecture Design

Once the dataset is prepared, the next step is to choose a model architecture and configure it. This involves:

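- Choosing a suitable architecture: The architecture should fit the requirements of the use case or domain. Nearly all modern LLMs are transformer-based, so the main choice is between transformer variants. For example, an encoder-decoder transformer is a natural fit for sequence-to-sequence tasks such as translation and summarization, while decoder-only models such as the GPT family are the usual choice for general-purpose text generation and chatbots. Recurrent neural networks (RNNs) have largely been superseded for these tasks because they process tokens one at a time and struggle with long-range dependencies.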

- Designing the model’s layers: This means setting the number of layers, the hidden size, the number of attention heads, and the context length to match the task and the compute budget. The granularity of the input (character-level, subword, or word-level tokens) is determined by the tokenizer rather than by the architecture; a transformer can process any of them.
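To make these configuration choices concrete, here is a sketch of a decoder-only transformer config with a back-of-the-envelope parameter count. The class `DecoderConfig` and its fields are hypothetical; the estimate assumes a standard block with four d × d attention projections and an MLP whose hidden layer is 4× wider, tied input/output token embeddings, and it ignores biases, layer norms, and position embeddings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    """Hypothetical hyperparameters for a decoder-only transformer."""
    vocab_size: int      # set by the tokenizer, not the architecture
    d_model: int         # hidden size
    n_layers: int        # number of transformer blocks
    n_heads: int         # attention heads per block
    context_length: int  # maximum sequence length in tokens

    def __post_init__(self):
        # Each head gets an equal slice of the hidden dimension.
        if self.d_model % self.n_heads != 0:
            raise ValueError("d_model must be divisible by n_heads")

    @property
    def head_dim(self) -> int:
        return self.d_model // self.n_heads

    def approx_params(self) -> int:
        """Rough count: 12 * d_model^2 per block plus tied token embeddings."""
        attention = 4 * self.d_model ** 2  # Q, K, V, and output projections
        mlp = 8 * self.d_model ** 2        # d -> 4d -> d feed-forward layers
        embeddings = self.vocab_size * self.d_model
        return self.n_layers * (attention + mlp) + embeddings


# Dimensions of GPT-2 small, used here only as a familiar reference point.
gpt2_small_like = DecoderConfig(
    vocab_size=50257, d_model=768, n_layers=12, n_heads=12, context_length=1024
)
print(gpt2_small_like.head_dim, gpt2_small_like.approx_params())
# → 64 123532032
```

The estimate lands close to the roughly 124M parameters usually quoted for GPT-2 small; most of the gap is the position embeddings, biases, and layer norms that the sketch leaves out. Because the count grows with d_model squared, the hidden size is usually the dominant cost lever.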

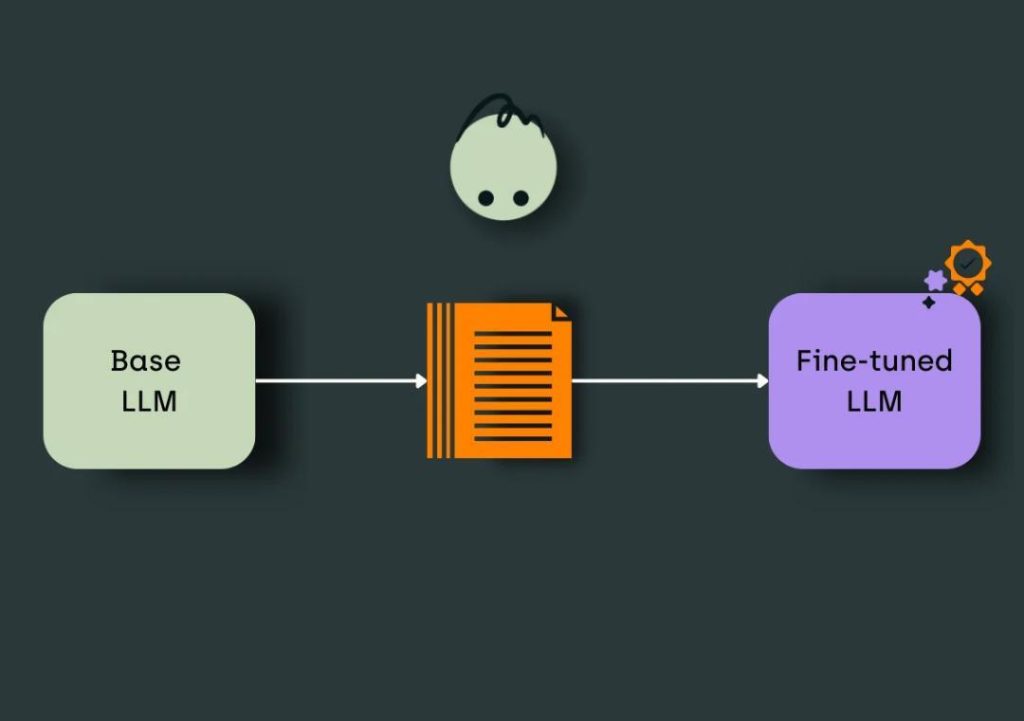

Fine-Tuning: Adjusting Weights for Domain Expertise

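Once the architecture is chosen, the model is pretrained on a large, general-purpose corpus; in practice, teams often skip this stage and start from an existing pretrained model instead. The next step is to fine-tune the model’s weights: continuing training on a smaller, targeted dataset to specialize the model for a specific domain or use case. This is essential for achieving high accuracy and usability in real-world applications.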

Fine-tuning can be achieved through various techniques, including:

- Transfer learning: This involves using a pre-trained model as a starting point and fine-tuning its weights for a specific domain or use case.

- Domain adaptation: This involves continuing training on unlabeled text from the target domain, such as medical or legal documents, so the model picks up that domain’s vocabulary and style.

- Task-specific training: This involves supervised training on labeled examples for a specific task, such as instruction-response pairs or translation pairs, to adjust the model’s weights for that task.
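For task-specific training on prompt-response pairs, a common convention is to compute the loss only on the response tokens, so the model learns to produce answers rather than to reproduce prompts. The sketch below shows that idea with a hypothetical whitespace tokenizer (`toy_tokenize`) standing in for a real subword tokenizer; `build_sft_example` and `max_len` are also illustrative names. It uses -100 for masked positions because that is the default `ignore_index` of PyTorch’s `CrossEntropyLoss`, and it assumes the training code shifts labels by one position for next-token prediction, as many causal-LM implementations do internally.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def toy_tokenize(text, vocab):
    """Hypothetical whitespace tokenizer: each new word gets the next free id."""
    ids = []
    for word in text.split():
        if word not in vocab:
            vocab[word] = len(vocab)
        ids.append(vocab[word])
    return ids


def build_sft_example(prompt, response, vocab, max_len=512):
    """Concatenate prompt and response, masking prompt tokens out of the loss."""
    prompt_ids = toy_tokenize(prompt, vocab)
    response_ids = toy_tokenize(response, vocab)
    input_ids = prompt_ids + response_ids
    # Only response positions keep their token id as a training target.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return {"input_ids": input_ids[:max_len], "labels": labels[:max_len]}


vocab = {}
example = build_sft_example("Translate to English: bonjour", "hello", vocab)
print(example)
# → {'input_ids': [0, 1, 2, 3, 4], 'labels': [-100, -100, -100, -100, 4]}
```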

Reducing Compute Costs with Techniques like LoRA

Fine-tuning can be computationally expensive, especially for large language models. To reduce compute costs, various techniques can be used, including:

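- Low-Rank Adaptation (LoRA): This freezes the pretrained weights and learns a low-rank update for selected weight matrices. Instead of updating a full d × k matrix W, LoRA trains two small matrices, B (d × r) and A (r × k), with the rank r much smaller than d and k, and uses W + BA in place of W. This cuts the number of trainable parameters, and with it the memory needed for gradients and optimizer state, by orders of magnitude. After training, BA can be merged back into W, so the adapted model adds no inference latency.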

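- Pruning: This involves removing unnecessary or redundant weights, neurons, or attention heads from the model. This shrinks the model and can reduce the computation needed for fine-tuning and inference, although unstructured pruning (zeroing individual weights) only yields speedups on hardware or kernels that exploit sparsity.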

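- Quantization: This involves reducing the numerical precision of the model’s weights (and sometimes activations), for example from 16-bit floating point to 8-bit or 4-bit integers. This reduces memory use, and a frozen, quantized base model can be combined with LoRA adapters to fine-tune large models on modest hardware.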
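The LoRA update described above can be sketched with plain Python lists to show where the savings come from. `LoRALinear`, `lora_param_count`, and the default `alpha=16` are assumptions for illustration; real implementations (for example in PyTorch) apply this to selected attention or MLP projections and train `A` and `B` with gradient descent, which is omitted here. Following the original LoRA initialization, `B` starts at zero so the adapted layer initially behaves exactly like the frozen one, and the update is scaled by `alpha / rank`.

```python
import random


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank, alpha=16.0, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen pretrained weights, d_out x d_in
        self.d_out, self.d_in = len(weight), len(weight[0])
        self.rank = rank
        self.scale = alpha / rank
        # A gets small random values; B starts at zero, so B @ A = 0 at first.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(self.d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(self.d_out)]

    def forward(self, x):
        """h = W x + scale * B (A x); W itself is never modified."""
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def merged_weight(self):
        """Fold the update into W after training: W + scale * B @ A."""
        return [
            [
                self.weight[i][j]
                + self.scale * sum(self.B[i][k] * self.A[k][j] for k in range(self.rank))
                for j in range(self.d_in)
            ]
            for i in range(self.d_out)
        ]

    def trainable_params(self):
        return lora_param_count(self.d_out, self.d_in, self.rank)


full = 4096 * 4096
lora = lora_param_count(4096, 4096, rank=8)
print(full, lora, f"{lora / full:.2%}")
# → 16777216 65536 0.39%
```

For a single 4096 × 4096 projection, full fine-tuning updates about 16.8 million weights, while a rank-8 adapter trains 65,536, well under 1% of them. Computing `B (A x)` instead of forming `B @ A` keeps the extra work during training proportional to the rank.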

Validation against Real-World Prompts

Once the model is fine-tuned, the next step is to validate its performance against real-world prompts. This involves testing the model on a diverse, held-out set of inputs that were not used in training and evaluating its performance on the target tasks, such as translation, summarization, and conversational dialogue.

Validation is essential for ensuring that the model is accurate and usable in real-world applications. It helps to identify any biases or errors in the model and ensures that it can handle a diverse range of inputs and tasks.
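A validation pass can be as simple as running a fixed, held-out set of prompts through the model and scoring each output with a check. In this sketch, `EvalCase`, `evaluate`, and the `generate` callable are hypothetical; `generate` stands in for whatever function calls the fine-tuned model, and `stub_generate` fakes it so the example runs. Real evaluations often rely on reference-based metrics or on human or model-based grading rather than simple string checks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True if the output is acceptable
    category: str                 # e.g. "translation" or "summarization"


def evaluate(generate: Callable[[str], str], cases: List[EvalCase]):
    """Run every held-out case and return per-category pass rates plus failures."""
    totals: Dict[str, Tuple[int, int]] = {}
    failures: List[Tuple[str, str]] = []
    for case in cases:
        output = generate(case.prompt)
        passed = case.check(output)
        seen, ok = totals.get(case.category, (0, 0))
        totals[case.category] = (seen + 1, ok + int(passed))
        if not passed:
            failures.append((case.prompt, output))  # keep for manual review
    scores = {cat: ok / seen for cat, (seen, ok) in totals.items()}
    return scores, failures


def stub_generate(prompt: str) -> str:
    """Stand-in for a real model call."""
    return "Hello." if prompt.startswith("Translate") else ""


cases = [
    EvalCase("Translate 'bonjour' to English.", lambda out: "hello" in out.lower(), "translation"),
    EvalCase("Summarize: The meeting moved to Friday.", lambda out: 0 < len(out.split()) <= 30, "summarization"),
]
scores, failures = evaluate(stub_generate, cases)
print(scores)
# → {'translation': 1.0, 'summarization': 0.0}
```

Reporting scores per category keeps a regression in one task from being hidden by gains in another, and the list of failures gives concrete examples to inspect for errors or bias.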

A Strong Feedback Loop for Continuous Improvement

The final step in training an LLM is to establish a strong feedback loop for continuous improvement. This involves:

- Collecting new data: New data, such as real user prompts and feedback on the model’s responses, should be gathered and used to fine-tune the model further so that it adapts to changing patterns in its inputs.

- Evaluating performance: The model’s performance should be evaluated on a regular basis to identify any biases or errors.

- Refining the model: The model should be refined and updated based on the results of the evaluation.

By establishing a strong feedback loop, the model can be continuously improved and refined to ensure its accuracy and usability in real-world applications.
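One turn of this loop can be sketched as two small decisions: which production interactions to send back for correction and re-training, and whether a refined model is good enough to replace the current one. `Feedback`, the 1 to 5 `rating` scale, `select_for_review`, `should_deploy`, and the regression `tolerance` are hypothetical; the per-category scores are assumed to come from some evaluation of held-out prompts run on both models.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Feedback:
    prompt: str
    response: str
    rating: int  # hypothetical 1 (bad) to 5 (good) user rating


def select_for_review(feedback: List[Feedback], threshold: int = 2) -> List[Feedback]:
    """Low-rated interactions become candidates for correction and re-training."""
    return [f for f in feedback if f.rating <= threshold]


def should_deploy(candidate: Dict[str, float], baseline: Dict[str, float], tolerance: float = 0.01) -> bool:
    """Ship the refined model only if no baseline category regresses by more than `tolerance`."""
    return all(candidate.get(cat, 0.0) >= score - tolerance for cat, score in baseline.items())


logs = [
    Feedback("Summarize this memo", "A short summary.", rating=5),
    Feedback("Translate 'merci'", "Please", rating=1),
    Feedback("Draft a reply", "Sure.", rating=2),
]
print([f.prompt for f in select_for_review(logs)])
# → ["Translate 'merci'", 'Draft a reply']

print(should_deploy({"translation": 0.92, "summarization": 0.70},
                    {"translation": 0.90, "summarization": 0.80}))
# → False
```

Gating deployment on every category, rather than an overall average, is a deliberately conservative choice: a model that improves translation but gets worse at summarization is held back until the regression is understood.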

Conclusion

Training Large Language Models requires a deep understanding of the underlying processes and techniques. From data preparation to fine-tuning, each step is essential for ensuring the accuracy and usability of the model. By following the best practices outlined in this blog post, developers can train high-quality LLMs that can be used in a wide range of applications.
